Innovation in UK Power Networks' Project Datum

At Avineon Tensing, we view innovation not as a singular event or a specific technology, but as a continuous, collaborative process ingrained in our operational ethos. It is the art of applying emergent technologies to solve practical business problems, creating measurable efficiencies and unlocking new value for our clients and our organisation. Our approach is founded on a partnership model, where we work alongside our clients to understand their deepest challenges and strategically deploy the most effective tools to address them. This philosophy is best illustrated by our multi-faceted and evolving work with UK Power Networks (UKPN) on their ambitious "Project Datum" initiative.

As a trusted partner and advisor to UKPN, one of the UK’s largest Distribution Network Operators, we’ve supported the project’s core objective of the comprehensive digitisation of the Eastern and London Power Networks. This work is a critical undertaking for modernising asset management, enhancing operational safety, and enabling future-focused network planning.

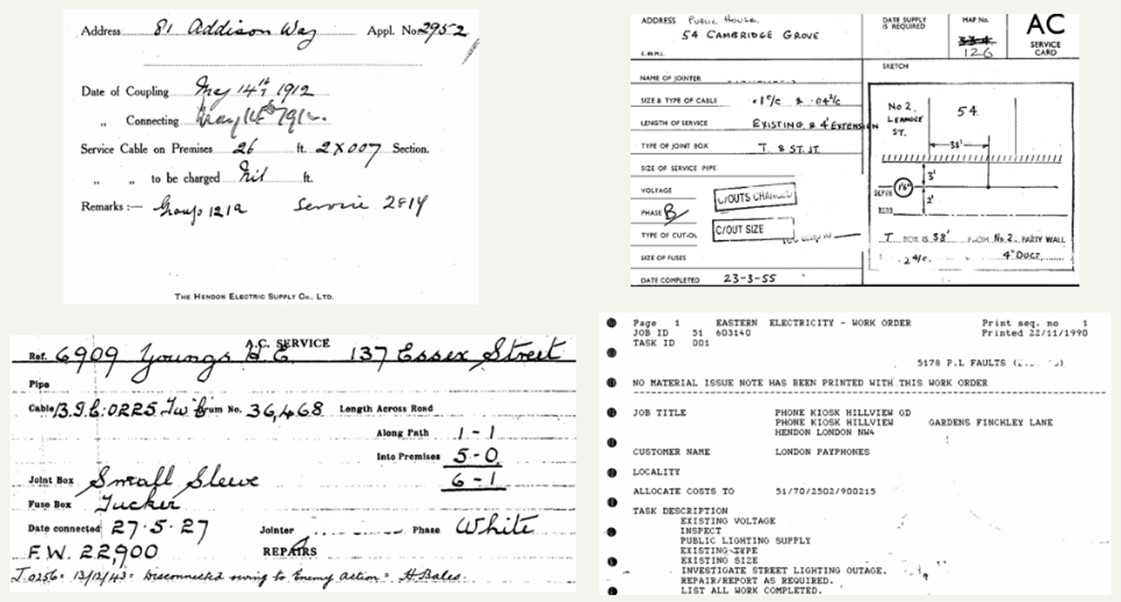

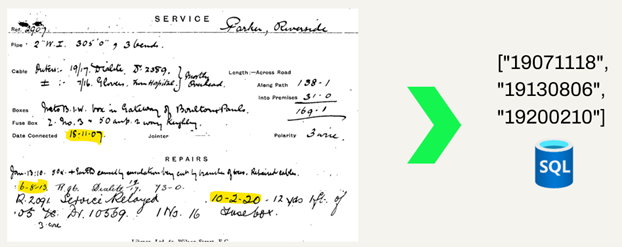

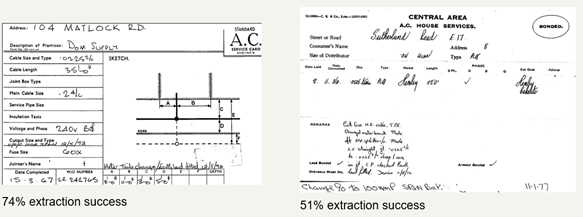

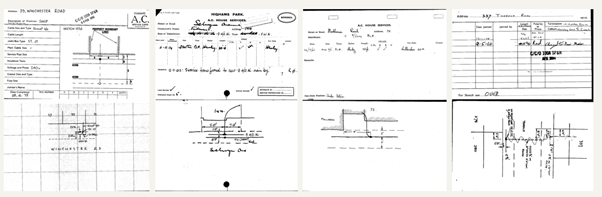

One component of the project is to utilise the digitised archive of over 4 million historical service record cards from the Eastern and London Power Network areas. A Service Record Card (SRC) is a historical ledger detailing the specifics of an electrical service installation at a given property. The collection spans from the dawn of the electrical age in the early 1900s through to the early 1990s, when capture became digital. These records are still used today and contain crucial network information. However, that information is held in a variety of unstructured templates and scanned paper records. The records feature a mix of typed and handwritten entries, varying templates and faded ink, and include complex engineering sketches. The layout, terminology, and even the quality of the handwriting can vary dramatically depending on the era and the individual engineer who completed the card. Within these millions of documents lies a rich and detailed history of the network's evolution, containing critical information for asset management, maintenance planning, and future investment strategies. In their raw, unstructured image format, however, this data is largely inaccessible and its value remains locked away.

Innovation Identification

Approximately two years into the five-year project, UKPN’s technical team identified a need to locate SRCs older than 1938 within the Eastern Power Network. This was due to historical materials and installation standards requiring additional attention. At the time, UKPN had limited visibility into the volume of such aged infrastructure, which was vital for building an accurate age profile of network assets, predicting failure rates, and prioritising investment.

The sheer volume of the records, coupled with the unstructured nature of the data, made a manual approach practically unfeasible. A preliminary analysis estimated that purely manual data extraction would require 20 years of dedicated effort, a timeline and cost that were simply untenable for UKPN. This presented a classic case where traditional methods were insufficient, creating a clear and compelling need for an innovative solution.

Business Case Formation and Presentation

Our team, through its analysis of the AI landscape, was aware of the rapid advancements in multimodal Large Language Models (LLMs). We identified Google's Gemini 1.5 Flash as a prime candidate for this task. Unlike traditional Optical Character Recognition (OCR) tools, which often struggle with varied handwriting and inconsistent document layouts, Gemini's multimodal capabilities meant it could process the entire image of the service card, understanding the spatial relationship between fields and values, and interpreting handwritten text with a high degree of accuracy. Furthermore, its 'Flash' designation signified a model optimised for speed and cost-efficiency, making it a perfect fit for a high-volume processing task.

Avineon Tensing put forward plans to extract the dates of installation and subsequent repairs from the 2.6 million service record cards.

We presented a clear value proposition:

- Reduction in project timelines from decades to months.

- Cost savings from millions in manual labour to hundreds of pounds in API usage.

- Increased data accuracy and consistency.

The proof-of-concept was executed entirely within FME. An FME workspace was created to read the sample images, call the Gemini API with a carefully crafted prompt, and write the extracted dates to a database. The results were then compared against a manually verified 'ground truth' dataset. The initial trial was very successful, achieving an accuracy rate that gave UKPN the confidence not only to approve the full-scale rollout of the date extraction process but also to begin thinking about the broader potential of this technology.

Client Engagement

Avineon Tensing engaged UKPN’s Technical Team to define success criteria and select representative samples. We proposed a proof-of-concept to validate the approach, and collaborated closely throughout the process, ensuring transparency and alignment.

Testing Regime

A robust testing regime was key to ensuring the quality and reliability of the extracted data. Our approach went beyond simple spot checks and instead provided a framework for validation and continuous improvement.

The process began with the creation of a 'gold standard' dataset. A sample of several hundred service cards, representing the full range of templates, ages and handwriting styles, was manually extracted by our sister company Avineon India Private Ltd, who had been working on the wider raster digitisation project. This dataset served as our ground truth for evaluating the performance of the AI models.

Our primary testing tool was FME itself. We developed dedicated FME Workspaces for testing. A Workspace would run the AI extraction process on the test dataset and then compare the AI-generated output against the gold standard data, field by field. The results were compiled into a detailed quality report, providing clear metrics on precision, recall, and overall accuracy for each attribute.
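The field-by-field comparison was built in FME, but the underlying arithmetic can be sketched in a few lines of Python. This is a minimal illustration rather than the production logic: the `field_metrics` function, the card identifiers and the `installation_date` field name are hypothetical, and the metric definitions (precision over values the model returned, recall over values present in the gold standard) are one reasonable interpretation.

```python
def field_metrics(gold, predicted, field):
    """Compare one attribute across all gold-standard cards.

    gold and predicted map a card id to a dict of extracted attributes.
    A value of None means "nothing recorded / nothing extracted".
    """
    true_positives = 0   # model returned a value and it matches the gold value
    predicted_count = 0  # model returned any value
    gold_count = 0       # gold standard holds a value
    exact_matches = 0    # model output equals gold output (including both empty)

    for card_id, gold_row in gold.items():
        gold_value = gold_row.get(field)
        predicted_value = predicted.get(card_id, {}).get(field)
        if gold_value is not None:
            gold_count += 1
        if predicted_value is not None:
            predicted_count += 1
            if predicted_value == gold_value:
                true_positives += 1
        if predicted_value == gold_value:
            exact_matches += 1

    return {
        "precision": true_positives / predicted_count if predicted_count else 0.0,
        "recall": true_positives / gold_count if gold_count else 0.0,
        "accuracy": exact_matches / len(gold) if gold else 0.0,
    }


# Hypothetical sample: four cards, one attribute.
gold = {
    "card-1": {"installation_date": "1936"},
    "card-2": {"installation_date": "1950"},
    "card-3": {"installation_date": None},
    "card-4": {"installation_date": "1921"},
}
predicted = {
    "card-1": {"installation_date": "1936"},
    "card-2": {"installation_date": "1956"},
    "card-3": {"installation_date": None},
    "card-4": {"installation_date": None},
}
report = field_metrics(gold, predicted, "installation_date")
```

Running the same function once per attribute gives the per-field rows of a quality report.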

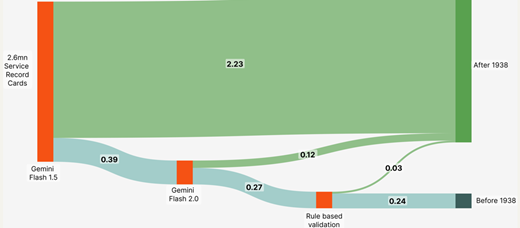

As Google released newer, more powerful versions of their Gemini models, we employed a champion-challenger methodology. We would run the new model (the challenger) against our existing model (the champion) using the same test dataset. The quality reports generated by FME provided an objective, data-driven basis for deciding when to upgrade. This ensured we were always using the most accurate and cost-effective model available. For example, we saw a tangible improvement in the accuracy of handwriting recognition when moving from Gemini 1.5 to Gemini 2.0. The newer model was, however, more expensive, so we decided to use the cheaper Gemini 1.5 Flash to screen the dataset initially, then run the more expensive Gemini 2.0 Flash against the smaller volume of pre-screened records to further validate the process.
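A champion-challenger decision can be reduced to a simple rule once both models have been scored against the same gold-standard dataset. The sketch below is illustrative only: the `ModelScore` fields, the `min_gain` threshold and the tie-breaking on cost are assumptions, not the criteria actually agreed with UKPN.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelScore:
    name: str
    accuracy: float       # share of gold-standard fields extracted correctly
    cost_per_1000: float  # hypothetical cost per 1,000 cards, any currency


def pick_champion(champion, challenger, min_gain=0.01):
    """Return the model that should be used going forward.

    Assumed rule: promote the challenger when it is clearly more accurate,
    or when accuracy is effectively equal and it is cheaper to run.
    """
    gain = challenger.accuracy - champion.accuracy
    if gain >= min_gain:
        return challenger
    if abs(gain) < min_gain and challenger.cost_per_1000 < champion.cost_per_1000:
        return challenger
    return champion


current = ModelScore("model-a", accuracy=0.90, cost_per_1000=1.0)
more_accurate = ModelScore("model-b", accuracy=0.95, cost_per_1000=2.0)
cheaper = ModelScore("model-c", accuracy=0.90, cost_per_1000=0.5)
less_accurate = ModelScore("model-d", accuracy=0.85, cost_per_1000=0.1)
```

Keeping the rule explicit means every upgrade decision can be traced back to the figures in the quality report.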

Finally, we embedded rule-based validation directly into the main production workflow in FME. This served as a real-time quality check. For example, we used regular expressions to validate date formats and attribute-based filters to flag records where the AI might have returned illogical values, such as a text string in a field that should be numeric. Any records that failed these validation checks were automatically routed for manual review, creating an efficient human-in-the-loop process. This hybrid approach, combining the scale of AI with the precision of rule-based logic and the nuance of human expertise, was key to achieving the project's high standards for data quality.
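The same kind of checks can be expressed outside FME. The following is a minimal Python sketch, assuming an ISO-style `YYYY-MM-DD` date and two hypothetical attribute names (`installation_date`, `service_length_m`); the real rules and field names in the FME workspace may differ.

```python
import re
from datetime import datetime

# Assumed target format for extracted dates.
DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def validate_record(record):
    """Return a list of validation issues; an empty list means the record passes."""
    issues = []

    date_value = record.get("installation_date")
    if not isinstance(date_value, str) or not DATE_PATTERN.match(date_value):
        issues.append("installation_date: unexpected format")
    else:
        try:
            datetime.strptime(date_value, "%Y-%m-%d")
        except ValueError:
            issues.append("installation_date: not a real calendar date")

    length = record.get("service_length_m")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if length is not None and (isinstance(length, bool) or not isinstance(length, (int, float))):
        issues.append("service_length_m: expected a number")

    return issues


def route(records):
    """Split records into those accepted automatically and those needing manual review."""
    accepted, manual_review = [], []
    for record in records:
        (manual_review if validate_record(record) else accepted).append(record)
    return accepted, manual_review
```

Records in the `manual_review` list correspond to the human-in-the-loop queue described above.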

Delivery and Implementation

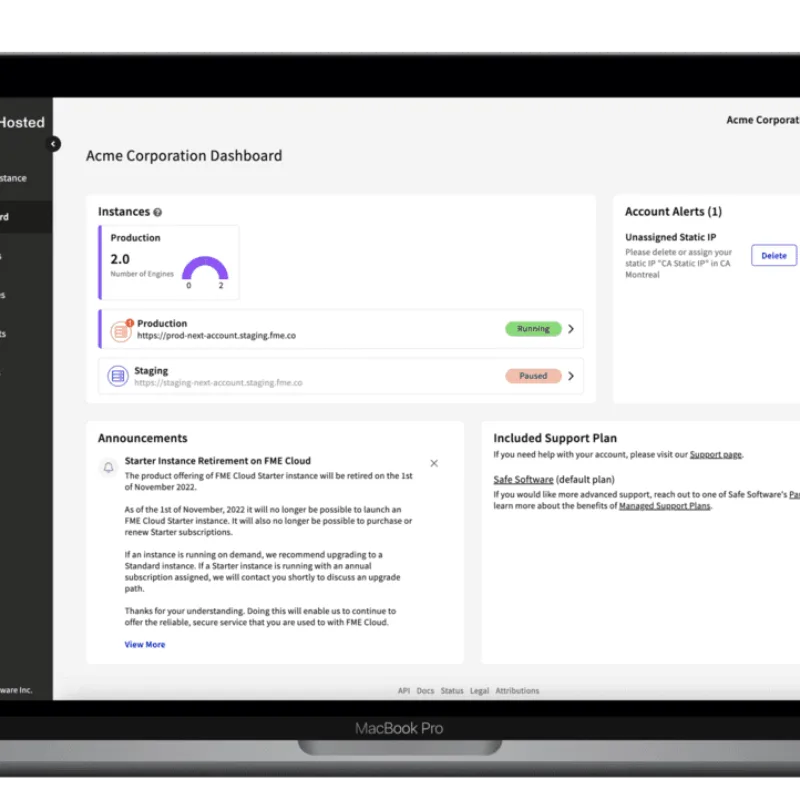

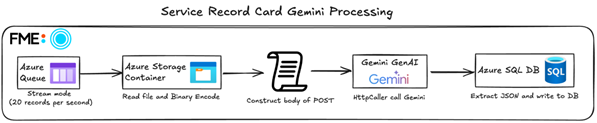

The delivery and implementation of the solution was orchestrated using the full capabilities of the FME Platform, ensuring a scalable, resilient and manageable data pipeline. The core of the solution was an FME workspace designed to process a single service record card. This workspace was then deployed to multiple FME Engines, allowing for massively parallel processing.

The end-to-end workflow was managed through a series of interconnected stages. It began with an FME workspace that listed all the image files from a secure FTP site, unzipped them, and loaded them into an Azure Storage Container. The file paths were then published as messages to an Azure Queue. This queuing mechanism was the key to our scalability. It decoupled the file listing process from the extraction process, allowing us to add or remove FME Engines to the processing farm as required, without interrupting the workflow.
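The decoupling effect of the queue can be illustrated with Python's standard library. In this sketch an in-process `queue.Queue` stands in for the Azure Queue and threads stand in for FME Engines; both substitutions, and the `run_pipeline` and `process` names, are assumptions made purely for illustration.

```python
import queue
import threading


def run_pipeline(file_paths, process, worker_count=4):
    """Publish file paths to a queue and let a pool of workers drain it.

    The producer (file listing) knows nothing about how many workers exist,
    so the pool size can change without touching the listing step.
    """
    work = queue.Queue()
    for path in file_paths:
        work.put(path)

    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            try:
                path = work.get_nowait()
            except queue.Empty:
                return  # queue drained, worker exits
            outcome = process(path)
            with results_lock:
                results.append(outcome)

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


# Stand-in "extraction" step: upper-case the path.
processed = run_pipeline(["a.png", "b.png", "c.png"], str.upper, worker_count=2)
```

Changing `worker_count` is the in-process analogue of adding or removing engines from the processing farm.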

The main processing workspace was triggered by messages from the Azure Queue. For each message, it would:

- Read the corresponding service card image from the Azure Storage Container.

- Perform any necessary pre-processing within FME, such as the image cropping for the computer vision step.

- Use a BinaryEncoder to prepare the image for the API call.

- Construct the detailed prompt and the body of the POST request, including the encoded image data and the structured JSON schema for the desired output.

- Call the Google Gemini API using the HTTPCaller transformer.

- Receive the JSON response and use transformers like the JSONExtractor and JSONFragmenter to parse the structured data.

- Perform the rule-based validation checks.

- Write the final, validated data to an Azure SQL Database.
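The encode, construct and parse steps above can be sketched in Python. This is a hedged illustration, not the FME implementation: the URL pattern and body field names reflect our reading of Google's public REST documentation for `generateContent` and should be checked against the current API reference, while the prompt and the schema properties (`installation_date`, `repair_dates`) are hypothetical. No network call is made.

```python
import base64
import json

# Assumed REST pattern; the model name is filled in by the caller.
GEMINI_URL_TEMPLATE = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)

# Hypothetical output schema for the date extraction task.
DATE_SCHEMA = {
    "type": "OBJECT",
    "properties": {
        "installation_date": {"type": "STRING", "description": "Date the service was installed."},
        "repair_dates": {"type": "ARRAY", "items": {"type": "STRING"}},
    },
}


def build_gemini_request(image_bytes, prompt, schema, mime_type="image/png"):
    """Build the POST body: prompt text, base64 image, and a structured-output schema."""
    encoded = base64.b64encode(image_bytes).decode("ascii")  # the BinaryEncoder step
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ]
            }
        ],
        "generationConfig": {
            "responseMimeType": "application/json",
            "responseSchema": schema,
        },
    }


def parse_gemini_response(response_json):
    """Pull the structured JSON out of the first candidate (assumed response shape)."""
    text = response_json["candidates"][0]["content"]["parts"][0]["text"]
    return json.loads(text)


body = build_gemini_request(b"abc", "Extract the installation and repair dates.", DATE_SCHEMA)
```

In the production workflow the equivalent body is assembled inside FME and sent with the HTTPCaller transformer.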

Implementation with the FME Platform

For Avineon Tensing, FME and the partnership we have with Safe Software are key to how we undertake data integration challenges like this. FME is the platform at the heart of our complex data solutions. It provides a powerful, no-code graphical environment for designing and automating data workflows. Its strength lies in its ability to connect to hundreds of different data formats, applications and web services, from legacy file formats to the most modern cloud APIs. It allows us to read, transform, validate, and write data between any number of systems.

For Project Datum, the FME Platform became the orchestration layer, the conductor of complex data movement and AI processing. It provided the agility to build, test and adapt our workflows rapidly, and the scalability, through FME Flow, to execute these processes on an industrial scale and in an enterprise environment. It’s this combination of a complex, unstructured data challenge in the form of the SRCs, and a powerful, flexible orchestration platform in FME, that created the perfect environment for innovation.

Benefits & Value

For UKPN:

The benefits delivered to UK Power Networks through Project Datum have been both immediate and enduring. By digitising over four million historical service record cards using advanced AI and orchestration technologies, UKPN has dramatically accelerated its data transformation efforts, reducing timelines from decades to mere months. This has unlocked substantial cost savings and enabled the creation of a structured, queryable database that supports more informed asset management and investment decisions. The solution’s high accuracy, achieved through rigorous testing and validation, ensures data integrity, while its scalable cloud-based architecture allows for efficient processing at industrial scale. Furthermore, UKPN now possesses a future-proof digital asset that enhances operational resilience and planning capabilities. Benefits include:

- Accelerated Digitisation: Reduced project timelines from an estimated 20 years of manual effort to just months through AI-driven automation.

- Significant Cost Savings: Lowered costs from millions in manual labour to hundreds of pounds in API usage.

- Improved Data Accessibility: Transformed millions of unstructured service record cards into structured, queryable data.

- Enhanced Asset Management: Enabled accurate age profiling of infrastructure, supporting predictive maintenance and investment planning.

- High Data Accuracy: Achieved strong precision and reliability through multimodal AI, rule-based validation, and human-in-the-loop processes.

- Scalable and Resilient Infrastructure: Delivered a robust, cloud-based data pipeline using FME Flow and Azure services.

- Operational Efficiency: Streamlined workflows and reduced manual intervention, freeing up resources for other strategic initiatives.

- Future-Proofing: Created a lasting digital asset that supports long-term network resilience and planning.

- Vendor Flexibility: Adopted an ‘any-AI’ approach allowing seamless switching between AI models for cost and performance optimisation to ensure the most cost-effective solution for UKPN.

For Avineon Tensing:

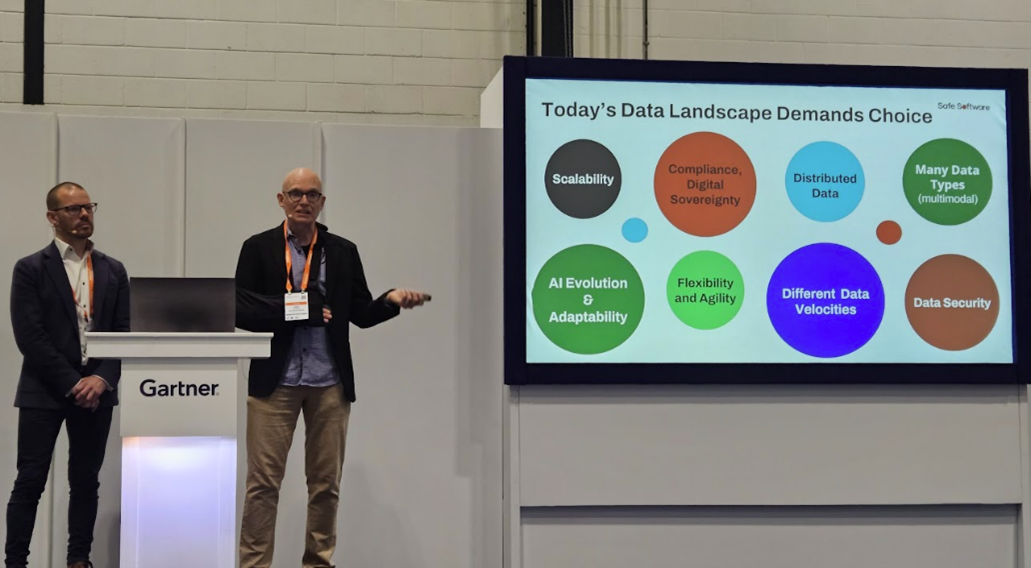

Project Datum has been a landmark initiative that has significantly advanced our reputation, capabilities, and strategic positioning. By delivering a complex, scalable solution to digitise millions of legacy records, we have demonstrated our leadership in applying cutting-edge technologies such as multimodal AI, computer vision, and orchestration platforms like FME. The project validated a robust methodology for tackling unstructured data challenges, which is now being applied across other sectors. The close collaboration with UK Power Networks has deepened our strategic partnership, whilst recognition from Safe Software and visibility at the Gartner Data and Analytics Conference have further cemented Avineon Tensing’s standing in the industry.

The story was showcased at the EMEA Gartner Data and Analytics Conference in London earlier this year, where UKPN CIO Matt Webb presented alongside Safe Software CEO Don Murray.

We’ve reinforced our reputation as a leader in innovative data solutions, strengthening our partnership with UK Power Networks to become their go-to partner for complex data integration. Using FME, we've created scalable and resilient workflows that reduce manual effort and increase delivery speed. This project has validated a repeatable methodology for AI-driven data extraction and orchestration, which we can now apply to other sectors. We've also demonstrated advanced use of multi-modal LLMs, proving that AI can deliver tangible value in projects like this.

Innovation Journey

Part 2: Expanding the Vision – From Dates to Full Digitisation

Successfully extracting dates gave us momentum, which was timely as we approached the London Power Network (LPN) area of the Datum project. Initially, the project aimed to use around 1 million geolocated Service Record Cards to extract and attribute supply point information, including voltage, cable type, joiners' names, service length, and phase. Our success using FME and Gemini together led to a more ambitious goal: to extract all technical information from every LPN service card, creating a complete structured digital twin of their historical network records.

This expanded scope significantly increased the project's complexity. We now needed to extract up to a dozen attributes, and their location varied across different card templates used over the decades. This is where FME's dynamic capabilities and the concept of 'agentic schema mapping' proved invaluable.


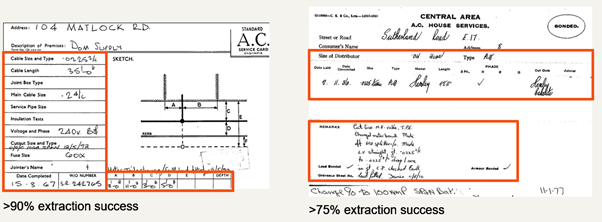

As powerful as these LLMs were, a single ‘zero-shot’ prompt was not capable of extracting and classifying all data within the diverse array of Service Record Card templates. The results shown above are still impressive, but we needed to try other techniques to improve extraction accuracy.

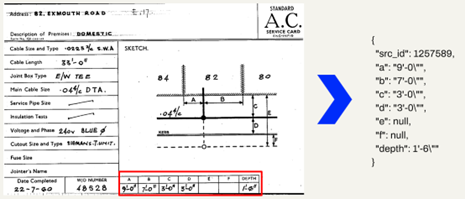

This is where we developed an agentic approach. The first step was to classify the SRC into a known template. FME then clipped the SRC against a pre-defined set of areas for that template and passed each area to the Google Gemini multi-modal LLM, together with a target data schema in JSON format.

This schema defined the required output fields, their data types, and provided descriptions to guide the AI's interpretation. For example, it would instruct the AI that 'Cable Length' should be a numeric value and describe how to handle different units of measurement. In essence, FME was dynamically providing the AI with the instructions and the template it needed to perform the schema mapping on the fly, transforming the unstructured chaos of the service card into a perfectly structured JSON object.
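To make the template-driven step concrete, the sketch below shows how a classified template could drive both the clip regions and the schema sent with each region. Everything here is hypothetical: the template name, the fractional clip boxes and the field definitions are invented for illustration and are not the actual LPN card templates.

```python
# Hypothetical template catalogue: each region has a clip box expressed as
# fractions of the image (left, top, right, bottom) and its own output schema.
TEMPLATES = {
    "template_a": {
        "cable_details": {
            "box": (0.5, 0.25, 1.0, 0.5),
            "schema": {
                "type": "OBJECT",
                "properties": {
                    "cable_length_m": {
                        "type": "NUMBER",
                        "description": "Cable length in metres; convert feet or yards to metres.",
                    },
                    "cable_type": {
                        "type": "STRING",
                        "description": "Cable type exactly as written on the card.",
                    },
                },
            },
        },
    },
}


def build_region_requests(template_id, image_width, image_height):
    """Turn a classified template into one (pixel box, schema) request per region."""
    requests = []
    for region_name, region in TEMPLATES[template_id].items():
        left, top, right, bottom = region["box"]
        pixel_box = (
            round(left * image_width),
            round(top * image_height),
            round(right * image_width),
            round(bottom * image_height),
        )
        requests.append(
            {"region": region_name, "pixel_box": pixel_box, "schema": region["schema"]}
        )
    return requests


region_requests = build_region_requests("template_a", image_width=1000, image_height=800)
```

Each entry in `region_requests` corresponds to one clipped sub-image and one model call, which is why this approach needs more API calls than a single zero-shot prompt.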

This agentic, orchestrated approach provided considerably better extraction results. Whilst additional API calls were required, the low cost of the Google Gemini service made this an economically efficient method.

As before, the process is scalable, using cloud resources only as required. FME Flow orchestrates this expanded workflow. Images are clipped and placed into a message queue in Azure, and a pool of FME Engines then processes these messages in parallel for rapid processing, often reaching over 300 inference responses per minute. FME Workspaces handle the entire process for a single card: reading the image, breaking down its components, constructing prompts, calling the Gemini API, validating the returned JSON, and writing the structured data to a central Azure SQL Database.

We are now well into the LPN project, processing thousands of records monthly and continually improving the FME workflow to enhance extraction quality.

The world of Generative AI moves quickly, and we regularly review alternative LLMs. The recent GPT-5 release potentially offers lower costs than Google Gemini, and our solution's design makes switching easy within FME. This seamless ability to swap out the AI model by simply changing the endpoint in the HTTPCaller transformer is a core tenet of our 'any-AI' approach. It ensures our clients are not locked into a single vendor and can always benefit from the best available technology.

With each new release, we used our FME-based test harness for a champion-challenger evaluation. We processed our gold-standard dataset with the new model and compared the results against the incumbent.


Part 3: A Machine Learning (ML) and Computer Vision (CV) approach for Complex Sketches

For the last few years, we’ve been developing our Computer Vision (CV) capabilities alongside our work with multi-modal LLMs. Historically, training CV models was incredibly intensive and fragile. However, new platforms and improved models have made fine-tuning and deploying them much easier.

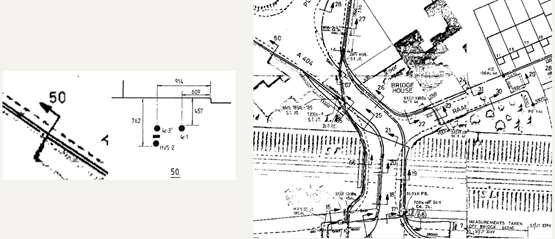

Within the Datum project, we needed to locate, associate, and extract cross-section information from UKPN's large raster datasets. These images use cross-sections, depicted as a bent arrow with an adjacent number, to show the type and configuration of electrical ducts. Since using LLMs to find these features was unsuccessful, we turned to computer vision to solve the problem.

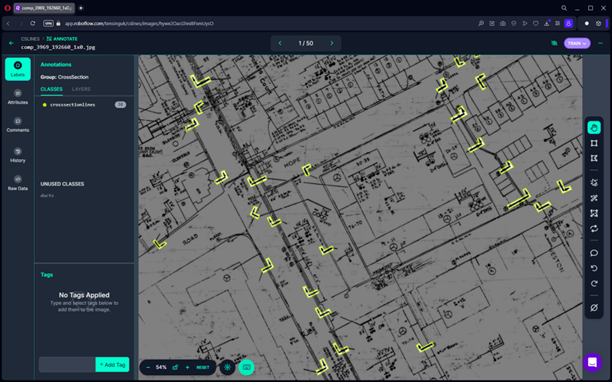

However, developing a custom computer vision model from scratch would have been an overly complex and resource-intensive process. In line with our philosophy of using the most efficient and accessible tools, we turned to Roboflow, a no-code platform for building and deploying computer vision models.

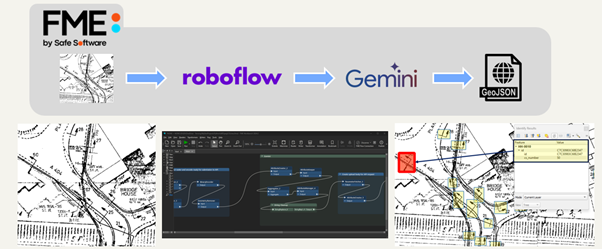

The innovation here was the creation of a hybrid, multi-stage AI pipeline, orchestrated by FME. We broke the problem down into two distinct tasks. First, we needed to find the location of the cross-section symbols on the page. Using Roboflow's intuitive interface, we trained a computer vision model by annotating a few hundred examples of the cross-section symbols. This no-code approach allowed us to rapidly develop a highly accurate object detection model. We then called the fine-tuned model, hosted on Roboflow’s inference API, from within our FME workflow.

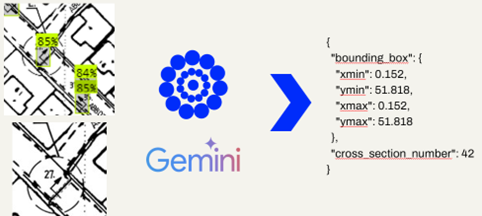

For the second step, we needed to read the number written next to each bent arrow symbol that the CV model had identified. We tried to fine-tune a model to achieve this, but it proved too unreliable. We therefore adopted Google Gemini to ‘read’ the number and extract it into JSON.

The enhanced workflow now operated as follows:

- For each service card image, FME first makes an API call to the deployed Roboflow model.

- Roboflow returns the bounding box coordinates for every cross-section symbol it detects on the image.

- FME then uses its powerful raster processing capabilities. For each set of coordinates returned by Roboflow, FME clips the original image, creating a small, focused sub-image containing just the cross-section symbol and its adjacent number.

- These smaller, highly contextual image snippets are then passed to the Google Gemini API.

- With this focused input, Gemini's OCR capabilities are able to extract the cross-section number with exceptional accuracy.
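The clipping step in this chain comes down to converting each detection into a padded crop rectangle. The sketch below assumes detections arrive as a centre point (`x`, `y`) plus `width` and `height` in pixels, which is our understanding of the Roboflow response format; the `pad_ratio` used to capture the adjacent number is a hypothetical value, and the actual clipping is performed by FME's raster tools.

```python
import math


def prediction_to_crop_box(prediction, image_width, image_height, pad_ratio=0.5):
    """Convert a centre-based detection into a padded (left, top, right, bottom) box.

    The box is enlarged by pad_ratio on each axis so the number written next
    to the symbol is included, then clamped to the image bounds.
    """
    half_width = prediction["width"] / 2 * (1 + pad_ratio)
    half_height = prediction["height"] / 2 * (1 + pad_ratio)

    left = max(0, math.floor(prediction["x"] - half_width))
    top = max(0, math.floor(prediction["y"] - half_height))
    right = min(image_width, math.ceil(prediction["x"] + half_width))
    bottom = min(image_height, math.ceil(prediction["y"] + half_height))
    return left, top, right, bottom


# Hypothetical detection of one cross-section symbol.
detection = {"x": 100, "y": 100, "width": 40, "height": 20}
crop_box = prediction_to_crop_box(detection, image_width=2000, image_height=1500)
```

Each resulting box defines one small sub-image that is then sent to Gemini to read the number.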

This chained approach combines the strengths of a specialised computer vision model for location and a powerful generative AI model for text extraction, all managed and integrated by FME, and represents a significant leap in data processing capability. It allowed us to extract a final, critical piece of information from the service cards, one that was inaccessible to any single AI model on its own. This successful fusion of different AI disciplines, made possible by the orchestration power of FME, is a perfect example of how we break down complex problems and apply the best tools for each component, delivering a solution that is greater than the sum of its parts.

This particular solution took three days to set up, covering both the Roboflow CV model training and the FME Workspace development. The process runs one batch at a time to fit with the current digitisation workflow, and typically saves 3-4 hours per batch. So far we have completed over 135 batches, saving 59 days of work; over 380 batches remain, which will save more than 150 further days of manual effort.
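The savings figures can be reproduced with simple arithmetic. The sketch below assumes the midpoint of the quoted range (3.5 hours per batch) and an 8-hour working day; both values are assumptions chosen because they match the quoted totals, not figures stated by the project.

```python
def days_saved(batches, hours_per_batch=3.5, hours_per_day=8.0):
    """Working days saved, given hours saved per batch and the length of a working day."""
    return batches * hours_per_batch / hours_per_day


completed = days_saved(135)   # batches completed so far
remaining = days_saved(380)   # batches still to process
```

Under these assumptions the completed batches come to roughly 59 days and the remaining batches to roughly 166 days, consistent with the figures quoted above.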

The Roboflow platform costs $250 per month, and the inference cost of the Google Gemini model is less than $5.

Recognised Benefits and Value Created

Using FME, AI, and Computer Vision has transformed how we approach UKPN's Project Datum, dramatically accelerating data digitisation.

Financially, the cost savings are substantial. The total expenditure on AI services is only a few thousand pounds, a negligible amount compared to the project's multi-million-pound cost and the expense of manual data handling. This significant return on investment allowed us to deliver the Eastern Power Network digitisation ahead of schedule and reallocate resources.

Beyond immediate savings, the project has created a valuable, lasting data asset. UKPN now has a fully structured, accurate, and queryable database of its historical network. This allows for previously impossible analysis, providing deep insights into asset lifecycles, maintenance patterns, and network vulnerabilities. This data-driven approach to asset management will lead to more efficient operations, targeted investments, and improved network resilience for years to come.

For Avineon Tensing, this project reinforced our position as a leader in innovative data solutions. It gave us a powerful and proven methodology for tackling large-scale, unstructured data challenges, which we are now applying to other sectors. Our close collaboration has also strengthened our strategic partnership with UKPN, positioning us as their go-to partner for complex data integration needs.

We are pleased to have been recognised by Safe Software in their recent Excellence Awards.

Unlock the Power of Your Geospatial Data

Is your organisation looking to adopt AI and Computer Vision? Let’s explore together: contact our specialists to start shaping your geospatial future and build a smarter data workflow.